The term “cybercrime” pops up in the news on a regular basis, and yet most people are unable to define precisely what it means. If you were to ask colleagues in your own company – regardless of department, role or hierarchical position – to explain what they mean by cybercrime, their answers would vary wildly. This vagueness gives rise to four different scenarios, each of which could also be described as a dilemma. And yet permanent solutions exist for all four of them.

The first scenario is primarily characterised by ignorance, as embodied in two contrasting views of cybercrime and its impact (i.e. how those affected become targets for this criminally profitable business). The first is a vague, hazy idea of cybercrime; the second is a very specific, cut-and-dried understanding of cybercrime. Both views contrast with concepts such as internet or computer crime, which can be defined with considerable precision. People often conceive their own highly individual ideas of cybercrime, which unfortunately exclude many of the most egregious risks. Reflecting this tendency, Germans have simply borrowed the English term “Cybercrime” – perhaps because, as Petra Haferkorn 1) suggests, it already sounds impressively threatening and thus avoids the need for further clarification.

The second scenario resides in the customary information gap between those who at first only have a hazy idea of the threat they face (such as senior managers who do not deal with the details of IT systems) and the experts (such as the IT department) capable of pinpointing the organisation’s weak points. To close this gap, the experts need considerable negotiating skills – first, to make themselves heard, and second, to impart vital information in clear, appealing ways. Building up an understanding of the subject under discussion is half the battle – but only half. The key issue is how corporate decision-makers subsequently assess the associated threat level, need for protection and safeguards required. To arrive at a balanced assessment, they must ask themselves how vulnerable the company is to attacks by cybercriminals. This in turn means asking which cybercriminals might be involved – are they opportunistic attackers bent on indiscriminately exploiting the weak points of any company, or well-prepared raiders intent on making a precisely targeted, carefully prepared intrusion attempt on an individual company’s IT systems? Which is more likely? What kinds of cybercriminals would find your company most attractive? And what kinds of information, data, processes or expertise could they be after? What kinds of damage could they cause – loss of data? Organisational disruption? Third-party claims for compensation? Is the company adequately prepared for all this? The answers to these key questions will suggest differing subjective views of the risks involved. This particular dilemma is best resolved by a cyber security check. As soon as senior management decides to discuss these and other issues with various in-house specialists, all those involved gain a clearer picture of the situation and a better idea of what to do about it. A kick-off event often helps to close the information gap between decision-makers and experts right at the start.
By engaging in internal discussions of the best courses of action, including methods for assessing and steps for combating the top ten cyber risks within the organisation, the cyber security check itself becomes a shared

The third scenario is a decision-making dilemma. Are we willing to do everything necessary to fight cybercrime? Or shall we instead confine ourselves to a “bare minimum” approach? Whatever we decide, there is always a cost attached – either the price of the measures that need to be taken, or the cost of the potential risk. What characterises this scenario is decision-makers’ reluctance to engage with it. Following the old adage “what I don’t know won’t hurt me”, senior managers may like to think they can avoid liability for any damage to their own company – or to compromised contractual partners or customers – resulting from cyber risks incurred by the company. Unfortunately, this tricky cost-benefit dilemma is not one that can be resolved by relying on so-called “cyber policies” as insurance against such incidents. Quite the reverse: insurance companies attach stringent conditions to such policies, requiring companies to take all necessary risk identification, assessment and prevention measures before they can qualify for cover. Furthermore, insurance companies take a very cautious approach to the cyber insurance business, because cyber hazards are just too complex. And on that particular issue, senior managers and IT security practitioners can only agree.

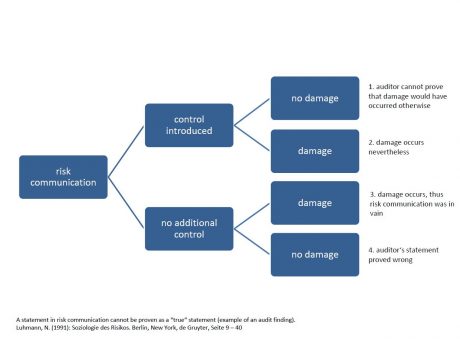

Paradoxically, the fourth scenario – the risk perception dilemma – offers a solution to the scenarios described previously. First, we must ask what exactly we perceive as a risk. The answer is – anything we expect to be a risk. But our expectations are based on the assumptions underlying our risk models. To build a model, we filter out irrelevant influencing factors and concentrate on the relevant ones. After all, risk models are useful precisely because they reduce complexity, enabling us to better understand and manage causal relationships and potential impacts. The problem with reducing complexity is not so much that certain factors are excluded as that subjective decisions must be made about which factors to exclude. Although our readers might disagree, we should point out that even a purportedly “objective” decision is, in reality, an intersubjectively verifiable agreement on criteria and premises. Thus any risk decision is inextricably associated with residual risks and blind spots. So what is the promised solution to this dilemma? In short, risk communication: all four of the scenarios described above (ignorance/expertise; information gap; decision costing; risk perception) can be resolved by risk communication. In her article “Risk communication from an audit team to the client” 1), Petra Haferkorn – who audits insurance industry risk models for Germany’s financial regulator BaFin – clearly explains the issues associated with making objective decisions:

Perceiving, assessing and deciding on risks should be regarded as a dynamic learning process. This does not mean that the problems simply disappear, but rather that they are gradually resolved over time. The concept of a cyber security practitioner is based on such a learning process. Starting with the senior management team, internal security managers launch an audit process during which they maintain an equitable, collegial relationship with all in-house personnel involved. The results of the audit are then fed back to the relevant business units and senior managers. If this process is repeated on a regular basis, those involved will gradually develop a mutual understanding of the perceived risks and how to contain them effectively. At the same time, and more or less as a matter of course, a culture of risk communication will emerge in which previously held beliefs are constantly re-examined and the status quo is regularly scrutinised for potential blind spots.

We would refer anybody who might be interested in an in-depth, theoretical exploration of risk communication to the book Die Politik der Krise – Soziologische Analysen zur Finanzkrise und ihre Konsequenzen (The Politics of Crisis – Sociological Analyses of the Financial Crisis and its Consequences) by Marco Jöstingmeier. Ultimately, what applies to financial risks also applies to cyber risks. In rapidly evolving situations, checklists, controls and regulations only help to a limited extent – it is essential to recognise that although they may claim to be universally valid, this is not in fact the case!

1) Petra Haferkorn, “Risk communication from an audit team to the client” in: Conference proceedings of the 2016 NetWork Workshop organised by the Fondation pour une Culture de Sécurité Industrielle (FONCSI) entitled “Risk communication in and for the real world – From persuasion to co-construction: reaching beyond an absolute trust in an absolute truth,” in the Navigating Industrial Safety SpringerBriefs series.
