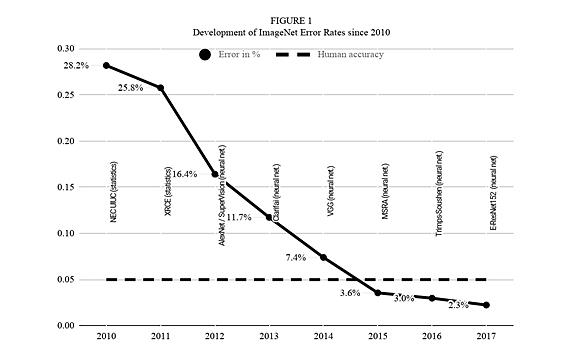

With the introduction of deep learning algorithms, machine learning has made rapid progress. One illustration of this progress is the error rate in the ImageNet challenge, where the task is to identify which of 1,000 possible categories an image shows.

In 2012, the introduction of AlexNet, a convolutional neural network and the first deep learning algorithm in this area, led to a drop in the error rate from 25.8% to 16.4%. Within five years, more advanced deep learning algorithms had reduced the error to 2.3%, which is better than the human error rate of about 5%.

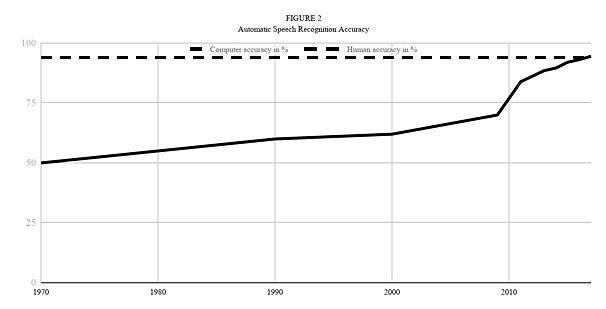

This improvement is all the more surprising because earlier algorithms, which relied on elaborate handcrafted feature engineering, had made only gradual progress over more than 40 years on a number of tasks where deep learning has since excelled.

One such example is automatic speech recognition, illustrated in figure 2, which shows slow progress before, and a rapid improvement in accuracy after, the introduction of deep learning in 2010.

Since 2012, deep learning architectures have surpassed human accuracy on a number of data-sets, in areas including speech recognition, image classification and question answering.

This has led to a “hype” in artificial intelligence that is used and sometimes abused by companies to sell their products. Some companies even claim that their products possess powers such as “cognitive intelligence”, which suggests that applications have something close to human cognitive capabilities.

However, progress in machine learning has to a significant extent been due to datafication, the collection of increasingly large data-sets in all areas of life. Another reason is the improvement in computing power, which has allowed more complex deep learning algorithms to be trained on those large data-sets. Algorithms and the ways of handling their learning have also improved, but even the most sophisticated algorithms have the logic of pattern detection at their heart, i.e. finding a complex relationship in a data-set.

If deep learning is mainly about extracting patterns from data, applications are feasible where:

Use cases where 1-3 are present are likely to succeed, whilst the promise of higher-order “cognitive intelligence” is out of reach with current algorithms.

If you are seeking an in-depth understanding of and practical exposure to the latest AI technologies – especially Deep Learning – you are welcome to join the programme Certified Expert in Data Science and Artificial Intelligence. The course covers the theoretical foundations of statistical modelling and the detailed analysis of neural models, along with associated machine learning procedures. It also includes a technical introduction to and practice in Python programming using the general “Data Science Stack” (NumPy, SciPy, pandas, scikit-learn), as well as TensorFlow for Deep Learning.

In part two of this blog series, we’ll give a brief insight into the projects we are working on in the field of Deep Learning.